Simulating Quantum Circuits on GPUs

By Joseph Lee on June 12, 2023

The field of quantum computing is advancing at a rapid pace, with exciting developments in every sub-field, including hardware and algorithm design.

Although the current era of Noisy Intermediate Scale Quantum (NISQ) computers may limit the size of problems we are able to solve on actual quantum hardware, we are still identifying more and more use cases which may benefit from the limited quantum resources, especially by utilizing both quantum and classical HPC hardware via hybrid algorithms. It is also vital to be able to simulate (using classical computers) the behaviour of quantum circuits as performed on quantum computers. One reason for doing so is to provide verification that the quantum hardware is performing as expected, up to the limits of the classical simulation. Another is that it allows us to test and research quantum algorithms, as the translation from theoretical algorithms to actual implementations always introduces complications.

Here at the EPCC Quantum Applications Group we are very interested in investigating the use of HPC for efficient simulation of quantum circuits and algorithms. Because a classical simulation has to capture the fundamentally quantum properties of superposition and entanglement, it is not surprising that it becomes extremely (exponentially) costly for circuits with many qubits and many gate operations. There are two main methods for simulating quantum circuits: state vector simulation and tensor networks. State vector simulation essentially tracks the entire quantum state through each gate operation of a circuit, and is particularly useful for simulating deep or highly entangled quantum circuits. Unfortunately, the memory requirement for state vector simulation scales exponentially with the number of qubits. On the other hand, the cost of tensor network methods scales with the amount of entanglement generated by the circuit and the number of gates, making it possible to simulate quantum circuits with a very large number of qubits by sacrificing some entanglement structure.
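To make "tracking the entire quantum state through each gate" concrete, here is a minimal NumPy sketch of applying a single-qubit gate to a state vector. It is an illustration only, not how any of the libraries discussed in this post implement it; the function name `apply_single_qubit_gate` and the convention that qubit 0 is the least significant bit of the amplitude index are our own assumptions.

```python
import numpy as np

def apply_single_qubit_gate(state, gate, target):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector.

    Assumed convention: qubit 0 is the least significant bit of the index.
    """
    n = state.size.bit_length() - 1  # state.size == 2**n
    # View the 2^n amplitudes as an n-dimensional array, one axis per qubit.
    # In C ordering axis 0 is the most significant bit, so qubit q is axis n-1-q.
    psi = state.reshape([2] * n)
    axis = n - 1 - target
    # Contract the gate's input index with the target axis. tensordot puts
    # the gate's output index first, so move it back into position.
    psi = np.tensordot(gate, psi, axes=([1], [axis]))
    psi = np.moveaxis(psi, 0, axis)
    return psi.reshape(-1)

# Hadamard gate
H = np.array([[1, 1], [1, -1]], dtype=np.complex128) / np.sqrt(2)

n_qubits = 3
state = np.zeros(2**n_qubits, dtype=np.complex128)
state[0] = 1.0  # start in |000>
for q in range(n_qubits):
    state = apply_single_qubit_gate(state, H, q)

# Every amplitude now has the same magnitude (a uniform superposition).
print(np.round(state.real, 4))
```

Each gate touches all 2^n amplitudes, which is why both the memory and the runtime of this approach grow exponentially with the number of qubits.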

We have recently demonstrated the ability to perform a state vector simulation of up to 44 qubits on 4096 CPU nodes on ARCHER2 using the QuEST library. It seems only appropriate to ask: can we, and should we, simulate quantum circuits on GPUs, which are now being utilised by so many fields of scientific computation?

Indeed we can, and in this blog post we will discuss which libraries are currently available and can be used on Cirrus, the EPSRC Tier-2 HPC facility housed at EPCC. There are in fact many Python SDKs for quantum circuit simulation, most notably Google’s Cirq and IBM’s Qiskit. While they may be easy to use, especially for smaller circuits, here we focus on C++ simulation libraries, which are more configurable on HPC systems.

If you are new to quantum computing, we recommend online resources such as the Qiskit Textbook, or the NQCC online quantum skills course. If you prefer traditional textbooks, then Nielsen & Chuang’s Quantum Computation and Quantum Information is the standard for the field.

Libraries

The three libraries we investigated are cuQuantum, QCLAB++, and QuEST.

cuQuantum is Nvidia’s package for quantum circuit simulation, optimized for their GPU line-up. It contains two libraries: cuStateVec and cuTensorNet, used for state vector and tensor network simulations respectively. cuQuantum provides a low-level C++ API which gives programmers the greatest degree of control, and is reasonably user-friendly for developers familiar with CUDA programming, especially for single-GPU simulations. The package also provides a Python interface which, on top of providing bindings to the C++ functions, includes a high-level API with support for ndarray-like objects from other Python modules such as NumPy and PyTorch, as well as extra high-level functions such as contract(). cuQuantum can also be used as the backend for other simulation tools including Cirq and Qiskit.
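To give a flavour of what a tensor network contraction looks like, the sketch below writes a two-qubit Bell-state circuit (a Hadamard followed by a CNOT) as a single Einstein summation. It uses NumPy's `einsum` rather than cuQuantum, so it runs anywhere. We understand cuQuantum's contract() to accept an einsum-style expression in the same spirit, but please check the cuQuantum documentation for the exact signature and options. The index labels are our own choice.

```python
import numpy as np

# Single-qubit |0> state and the Hadamard gate.
zero = np.array([1, 0], dtype=np.complex128)
H = np.array([[1, 1], [1, -1]], dtype=np.complex128) / np.sqrt(2)

# CNOT as a rank-4 tensor with indices [out_control, out_target, in_control, in_target].
CNOT = np.array(
    [[1, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 0, 1],
     [0, 0, 1, 0]],
    dtype=np.complex128,
).reshape(2, 2, 2, 2)

# Index labels (illustrative):
#   a: input of qubit 0, b: input of qubit 1,
#   c: qubit 0 after the Hadamard,
#   d, e: outputs of the CNOT (control, target).
bell = np.einsum("a,b,ca,decb->de", zero, zero, H, CNOT)

# bell[d, e] is the amplitude of |d e>; only |00> and |11> are non-zero.
print(np.round(bell.real, 4))
```

For a circuit this small the order of contraction does not matter, but for large networks finding a good contraction order is the expensive and important part of the problem.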

QCLAB++ is a GPU-accelerated C++ quantum circuit simulation package developed by the Lawrence Berkeley National Laboratory. QCLAB++ is designed to be light-weight and portable, and uses OpenMP offloading for GPU acceleration. This means that the library has no external dependencies, which is beneficial for quick prototyping. As a result, the code is also simpler, with the CPU and GPU implementations of algorithms being the same except for different OpenMP #pragma statements.

QuEST is a quantum circuit simulation tool developed by the QTechTheory group at the University of Oxford. On top of pure state vectors, QuEST also supports density matrices for simulating noisy quantum computers. QuEST provides multithreading and distributed parallelisation with OpenMP and MPI, and we have recently benchmarked it on EPCC’s ARCHER2 up to 44 qubits on 4096 CPU nodes. QuEST also utilises CUDA to provide acceleration on Nvidia GPUs.

Single-GPU results

We use the Quantum Fourier Transform (QFT) circuit as our timing benchmark. QFT is a part of many important quantum algorithms, including Shor’s algorithm for finding prime factors. The circuit consists of Hadamard gates and controlled phase gates, with the number of gates scaling as O(n²) for n qubits.
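As a rough illustration of the benchmark circuit, the following NumPy sketch builds the textbook QFT gate list (Hadamards, controlled phase gates, and a final layer of swaps to reverse the qubit order), applies it to a random state, and compares the result with a classical FFT. This is not the benchmark code used for the timings in this post; the function names, the gate-tuple format, and the convention that qubit 0 is the least significant bit are assumptions made for the example.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=np.complex128) / np.sqrt(2)

def qft_gates(n):
    """Textbook QFT gate list for n qubits (qubit 0 = least significant bit)."""
    gates = []
    for target in reversed(range(n)):
        gates.append(("h", target))
        for control in reversed(range(target)):
            # The phase angle halves with each step away from the target.
            gates.append(("cphase", control, target, np.pi / 2 ** (target - control)))
    for q in range(n // 2):
        gates.append(("swap", q, n - 1 - q))  # reverse the qubit order
    return gates

def run_circuit(gates, state):
    """Apply the gate list to a copy of `state` and return the new state vector."""
    n = state.size.bit_length() - 1
    index = np.arange(state.size)
    psi = state.astype(np.complex128)  # astype returns a copy
    for gate in gates:
        if gate[0] == "h":
            axis = n - 1 - gate[1]
            psi = np.tensordot(H, psi.reshape([2] * n), axes=([1], [axis]))
            psi = np.moveaxis(psi, 0, axis).reshape(-1)
        elif gate[0] == "cphase":
            _, control, target, theta = gate
            # Diagonal gate: only amplitudes with both bits set pick up a phase.
            both_set = ((index >> control) & (index >> target) & 1).astype(bool)
            psi[both_set] *= np.exp(1j * theta)
        else:  # swap
            _, a, b = gate
            psi = np.swapaxes(psi.reshape([2] * n), n - 1 - a, n - 1 - b).reshape(-1)
    return psi

n = 5
rng = np.random.default_rng(0)
state = rng.normal(size=2**n) + 1j * rng.normal(size=2**n)
state /= np.linalg.norm(state)

simulated = run_circuit(qft_gates(n), state)
# With the e^{+2 pi i jk/N} sign convention, the QFT matches NumPy's inverse FFT
# with orthonormal scaling.
reference = np.fft.ifft(state, norm="ortho")
print(len(qft_gates(n)), "gates; max deviation from FFT:",
      np.abs(simulated - reference).max())
```

In this construction the gate count is n Hadamards plus n(n-1)/2 controlled phase gates plus n/2 (rounded down) swaps, which is where the quadratic scaling comes from.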

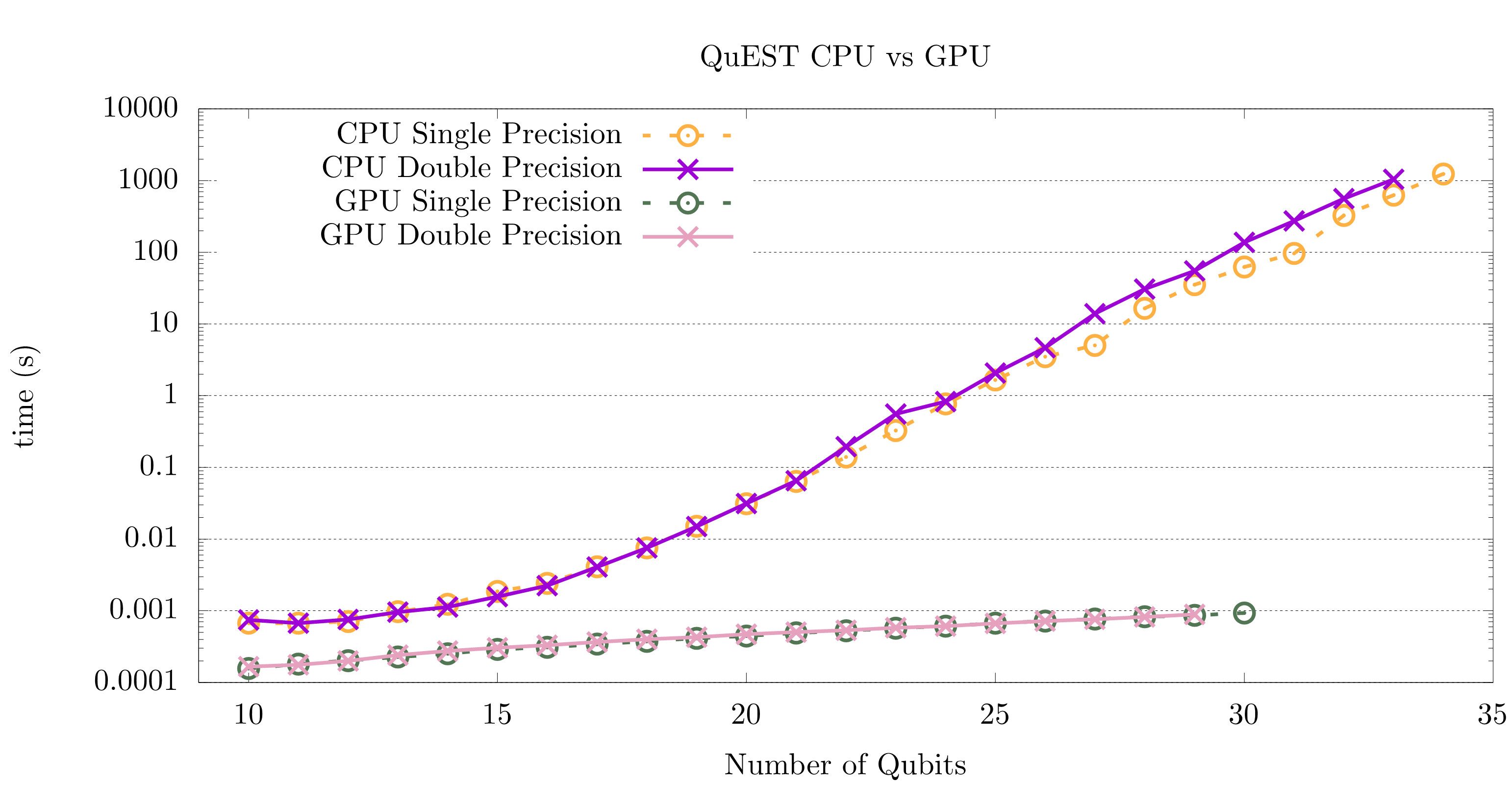

We first compare the performance of simulating on CPU vs GPU. The test is performed on Cirrus, where we use a single Nvidia V100 (16GB) GPU against a single CPU node with 256GB memory and 36 cores with multithreading via OpenMP. With greater memory, a single CPU-node simulation can simulate 6 more qubits than on a single GPU with QuEST. However, the speedup using GPU is extremely impressive, ranging from 3.5x for 12 qubits to over 67000x for 30 qubits.

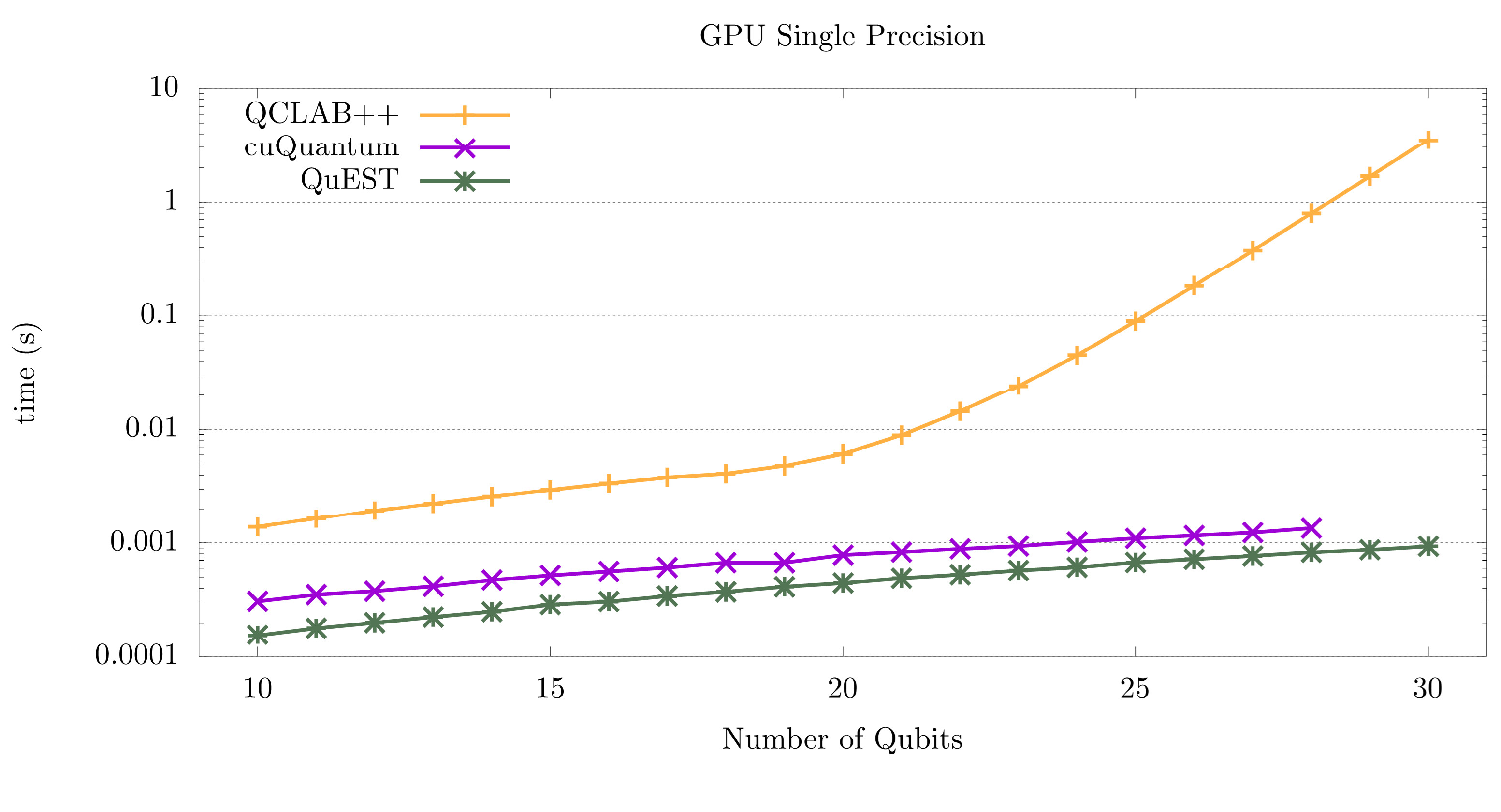

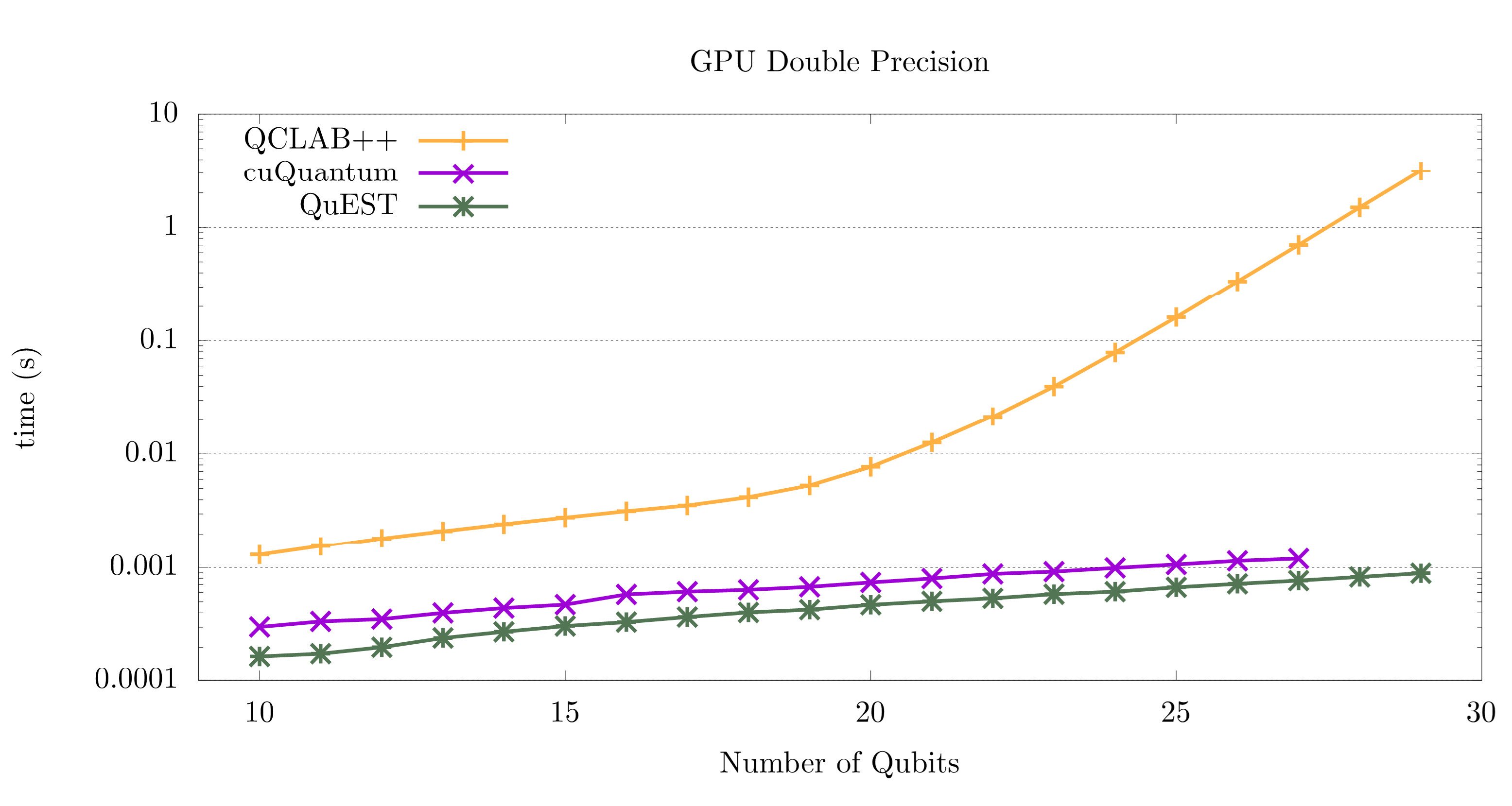

Next, we compare how the three libraries perform on a single GPU. QCLAB++ and QuEST are both able to simulate more qubits than cuQuantum. In terms of runtime, QuEST is the fastest, about 30-50% faster than cuQuantum, whereas QCLAB++ is about 10 times slower than QuEST for fewer than 20 qubits, and its scaling is significantly worse above 20 qubits. We also see that performing the simulation in single precision approaches a 2x speedup over double precision for QCLAB++, whereas it does not make much difference for cuQuantum and QuEST. On the CPU, the performance difference between single and double precision in QuEST increases with more qubits.

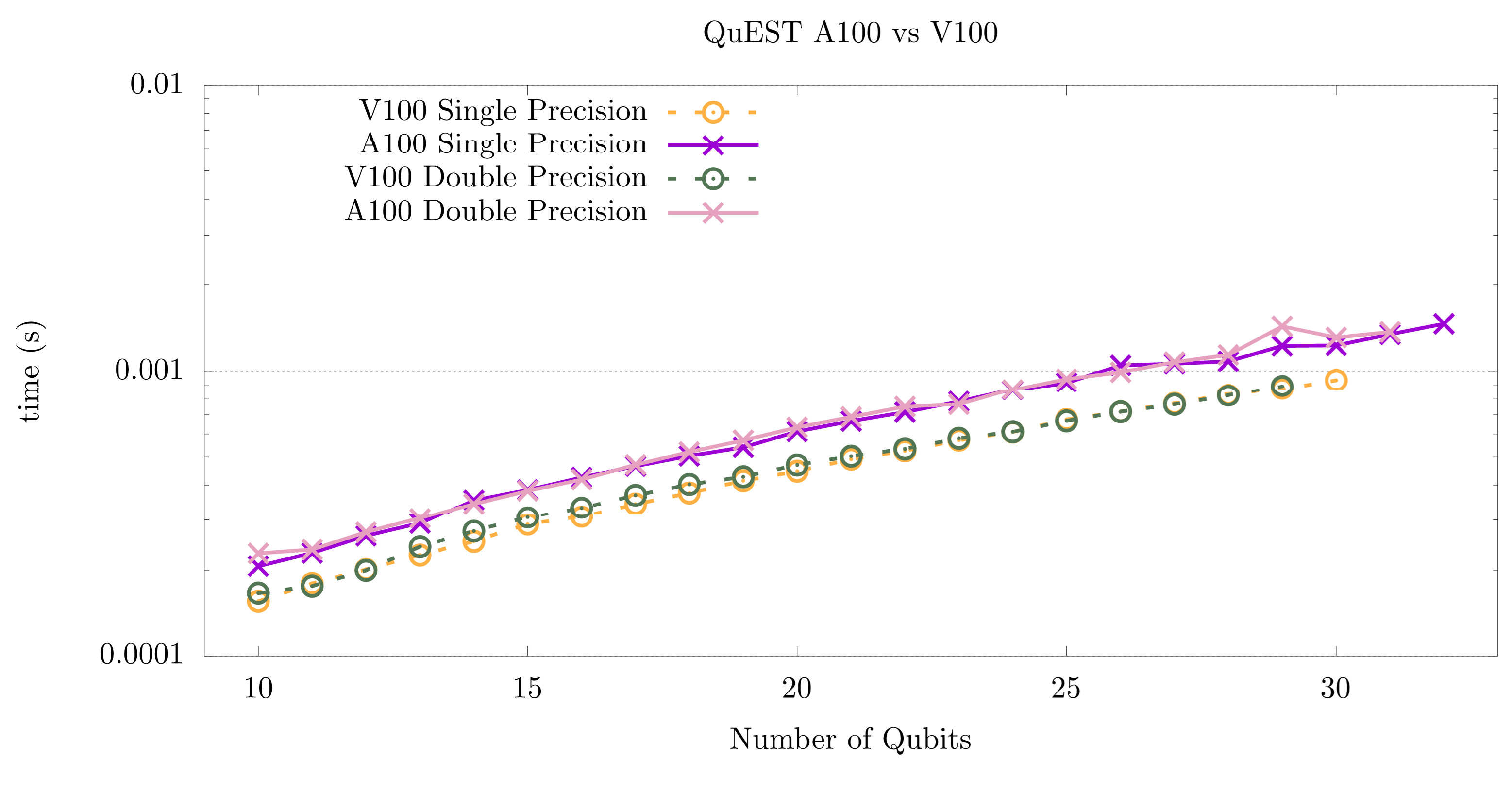

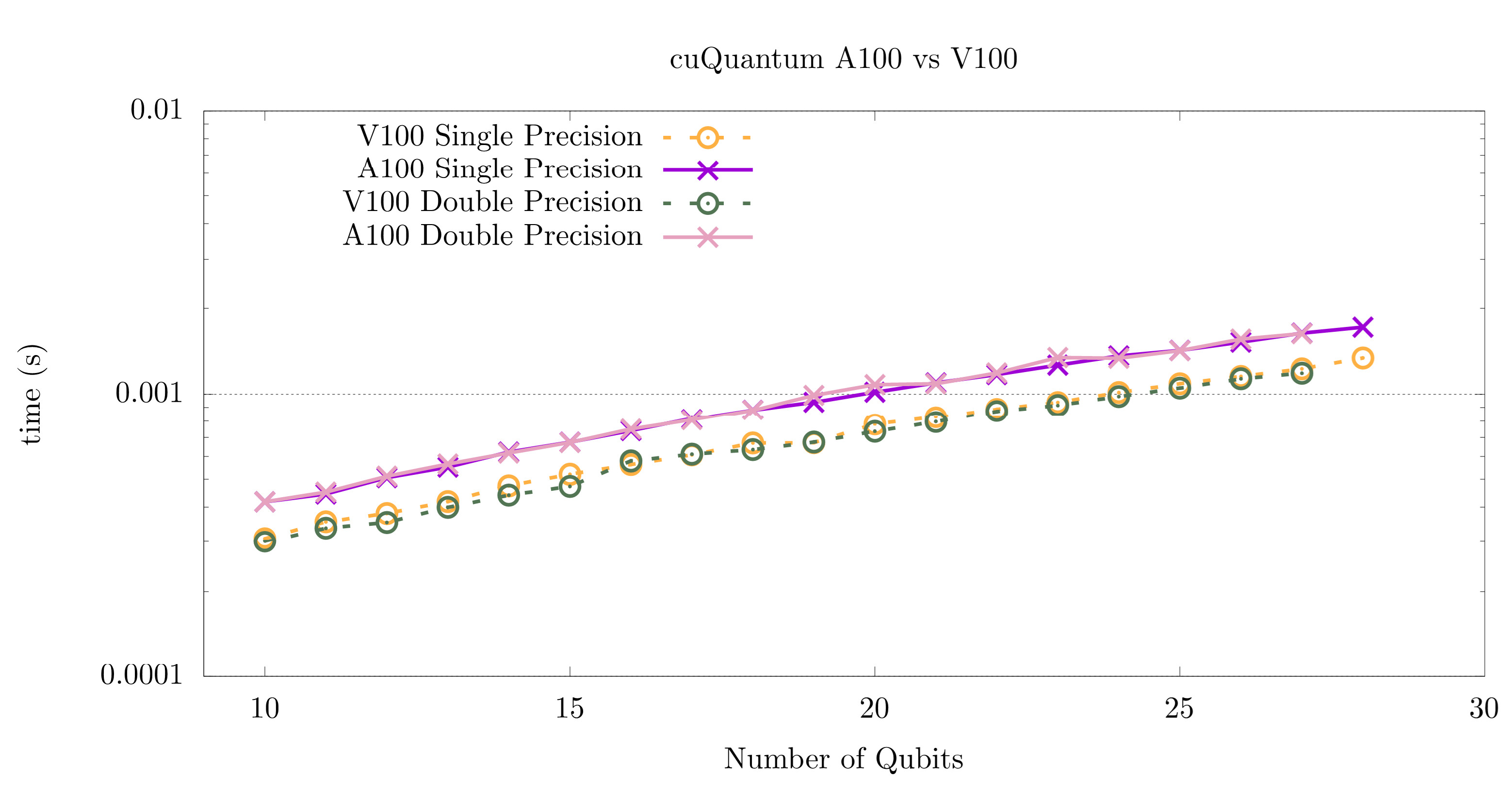

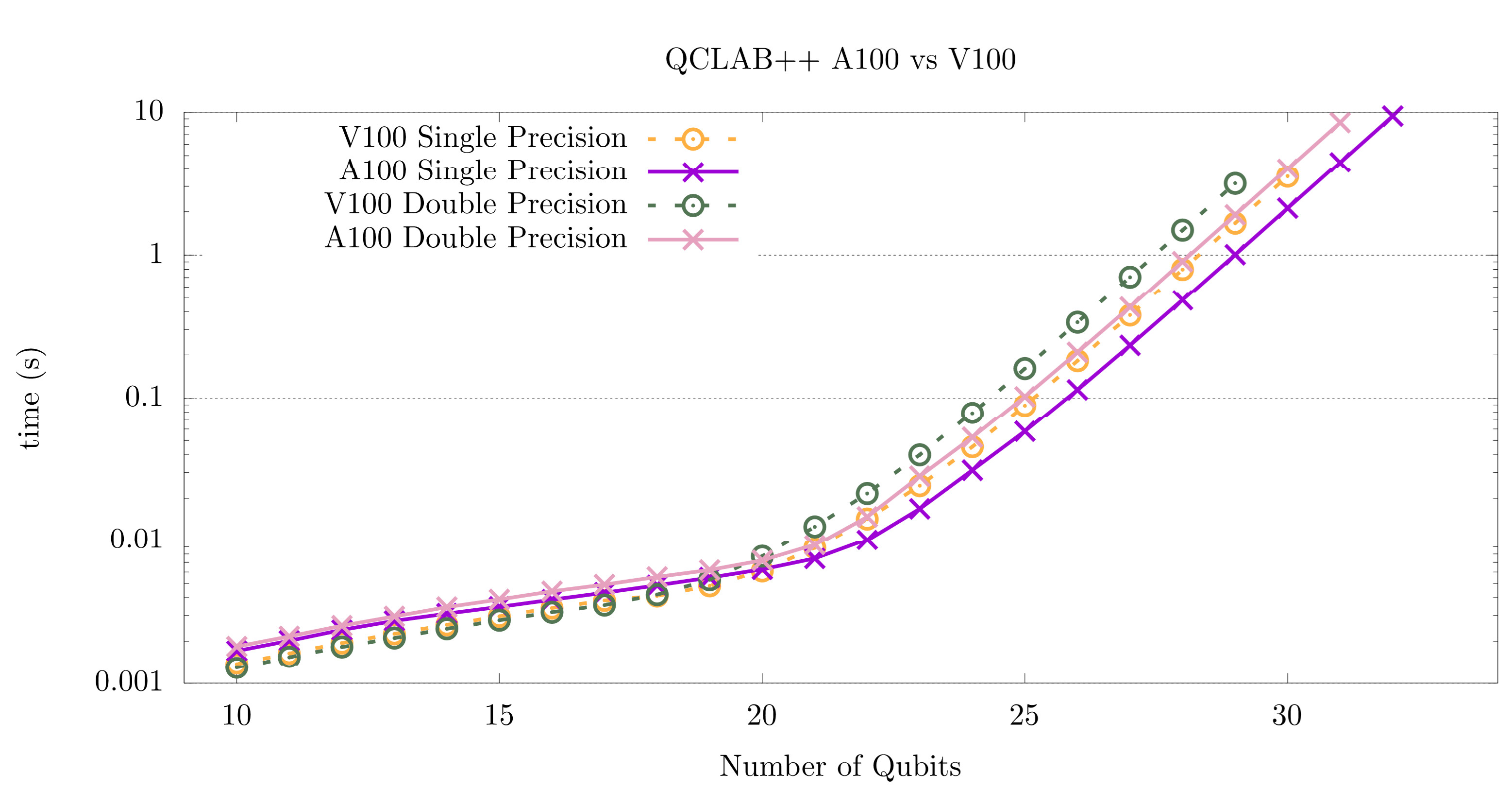

We also compared the performance of the libraries on the A100 GPU hosted by NextGenIO, which is a newer generation with larger memory (40GB). cuQuantum and QuEST seem to be slightly slower on the A100, but QCLAB++ simulations are up to 40% faster.

Multi-GPU and Multi-node

As we can see, once the simulation approaches approximately 30 qubits the state vector can no longer be stored on a single GPU; multiple GPUs, potentially across multiple nodes, will be required for simulating larger systems. There is currently significant momentum towards improving GPU simulations of quantum circuits.
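The memory limit can be estimated with some simple arithmetic: a state vector of n qubits holds 2^n complex amplitudes, each taking 16 bytes in double precision or 8 bytes in single precision. The short sketch below (with a helper name of our own choosing) counts only the state vector itself. Real libraries typically need additional workspace or communication buffers on top of this, so the practical limit on a given device may be lower.

```python
def state_vector_bytes(n_qubits, double_precision=True):
    """Bytes needed to store 2^n complex amplitudes (state vector only)."""
    bytes_per_amplitude = 16 if double_precision else 8
    return (2**n_qubits) * bytes_per_amplitude

GIB = 2**30

for n_qubits in (28, 29, 30, 31, 44):
    double = state_vector_bytes(n_qubits) / GIB
    single = state_vector_bytes(n_qubits, double_precision=False) / GIB
    print(f"{n_qubits} qubits: {double:g} GiB (double), {single:g} GiB (single)")
```

Each additional qubit doubles the requirement, so a 30-qubit state vector in double precision already needs 16 GiB before any workspace is counted.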

Currently QCLAB++ and QuEST do not support multi-GPU execution. cuQuantum does support multi-GPU execution, but writing multi-GPU operations via the C++ API is cumbersome due to the lack of support for cross-GPU gate application. Using the Python API, however, it is possible to run across multiple GPUs on the same node, or across multiple nodes with MPI (mpi4py).
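To see why cross-GPU gate application is the awkward part, consider a common way of distributing a state vector: split the 2^n amplitudes into equal contiguous chunks, one per device, so that the highest index bits identify the device. The sketch below is a generic illustration of that scheme under our own assumptions (power-of-two device count, qubit 0 as the least significant bit, hypothetical function name); it is not a description of how cuQuantum or any other library works internally.

```python
def exchange_partner(rank, target, n_qubits, n_ranks):
    """Return the rank to exchange amplitudes with for a single-qubit gate
    on `target`, or None if the gate can be applied without communication."""
    if n_ranks < 1 or n_ranks & (n_ranks - 1):
        raise ValueError("n_ranks must be a power of two")
    # Each rank holds 2^local_qubits contiguous amplitudes.
    local_qubits = n_qubits - (n_ranks.bit_length() - 1)
    if target < local_qubits:
        # Both amplitudes of every pair live on the same device.
        return None
    # The paired amplitude lives on the rank whose corresponding bit is flipped.
    return rank ^ (1 << (target - local_qubits))

# Example: 32 qubits spread over 4 devices, so 30 qubits are local to each.
for target in (0, 29, 30, 31):
    partners = [exchange_partner(rank, target, 32, 4) for rank in range(4)]
    print(f"gate on qubit {target}: partners per rank = {partners}")
```

Gates on the lower qubits stay local to each device, while gates on the highest qubits require pairs of devices to exchange data, which is the step that has to be coordinated by hand or by a higher-level API.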

Where do we go from here?

Surveying the broader landscape, the quantum computing community realises the need for an open, interoperable, hardware-agnostic programming model across the entire quantum ecosystem, especially for a future with hybrid quantum-classical computing architectures. There are already steps taken towards this goal, including the development of the QASM programming language (e.g. IBM’s implementation OpenQASM) and XACC. More recently, the QIR Alliance is a joint effort to develop and define an intermediate representation for quantum programming based on LLVM IR. By agreeing to and adopting a quantum programming standard, users will be able to pick and choose the back-end of their choice, smoothly transitioning from a classical CPU or GPU simulation to actual quantum hardware. There are already compilers and simulators with QIR support emerging, such as QIR Alliance’s QCOR and NWQSim, as well as Nvidia’s CUDA Quantum NVQ++. We see a glimpse of an exciting new paradigm for computing with heterogeneous quantum processors, and we will be testing these new tools in due course.

Available on Cirrus

The cuQuantum and QuEST libraries will be made available as modules on Cirrus.